Human Labor is the New Steam Engine

Existing enterprises built on human labor, together with their supporting structures, remarkably resemble the group drive system of the electrification revolution. Today's use of AI merely swaps the "steam engines" for "electric motors". Should history rhyme again, we will see new enterprises and social structures emerge, and that will be the new "unit drive" paradigm shift.

After reading The State of Enterprise AI report by OpenAI, an idea has stuck with me. The idea is interesting but also merciless in a way: human labor in existing enterprises is just the “steam engine” of the electrification revolution. The next leap forward is not about AI replacing human labor, which will happen anyway, but about reforming everything about enterprises and their supporting structures to embrace a new electricity: the abundance of intelligence created by AIs.

A bit of Prelude

The following is a summary, generated by Gemini 3 with a focus on restructuring, of the influential 1990 paper The Dynamo and the Computer by Paul David:

Paul David’s 1990 paper, “The Dynamo and the Computer: An Historical Perspective on the Modern Productivity Paradox,” explains why computers weren’t showing productivity gains by the late 1980s, drawing a historical parallel to electrification. The central insight is that general purpose technologies require fundamental organizational restructuring before they deliver productivity improvements, not just technological adoption.

The Restructuring Lesson from Electrification

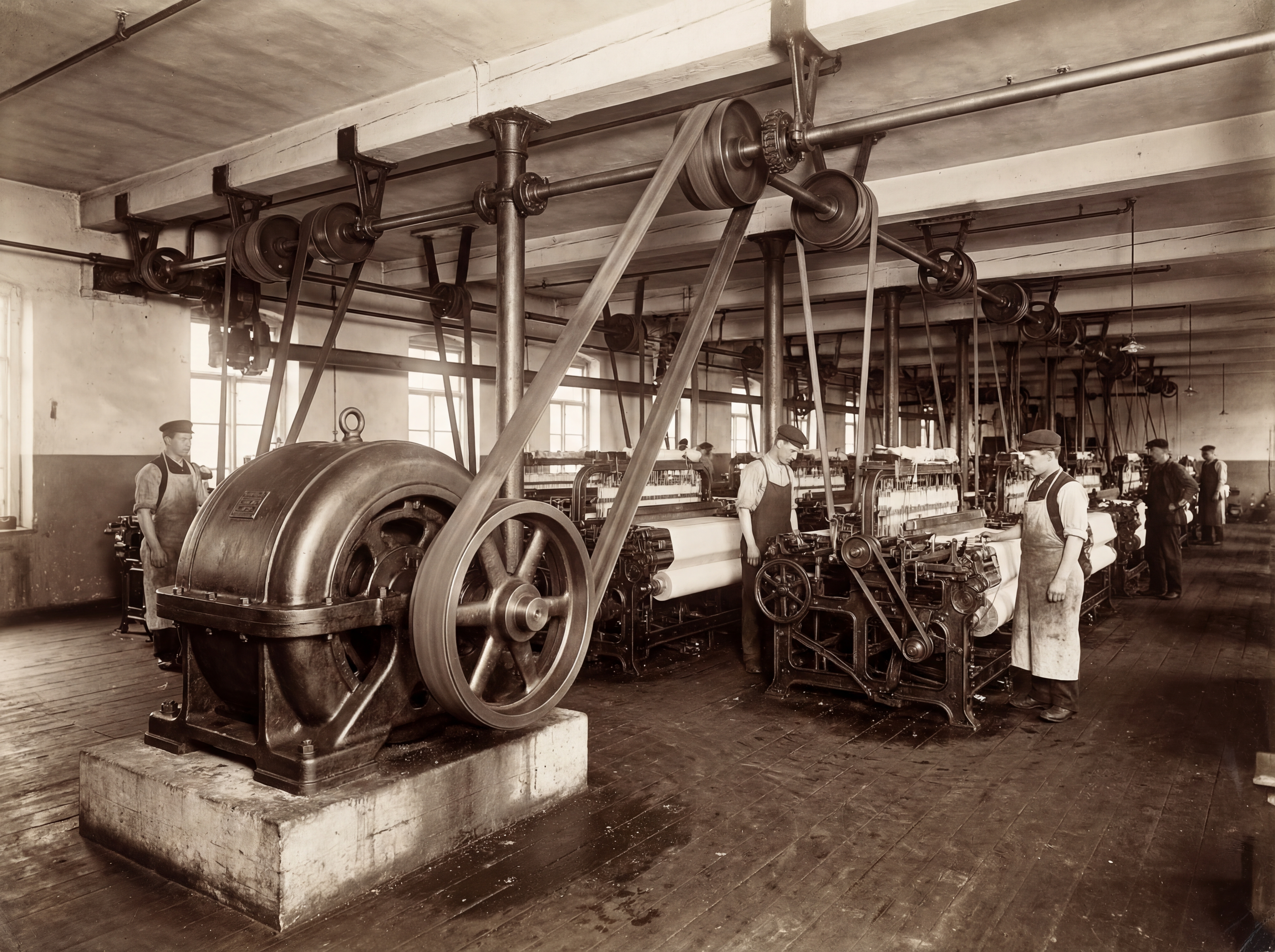

David observed that the electric dynamo, established by the 1890s, didn’t produce a productivity surge in US manufacturing until the 1920s—a four-decade delay. The critical insight was that early factories simply replaced steam engines with large electric motors in a “group drive” configuration, maintaining the same factory layouts and production processes. This superficial adoption yielded minimal efficiency gains.

Real productivity only emerged when factories completely redesigned themselves around “unit drive”—small individual motors for each machine. This enabled single-story buildings, flexible workflow arrangements, elimination of dangerous overhead belt systems, and fundamentally different manufacturing processes. The technology was the same, but the organizational structure had to be reinvented to unlock its value.

Application to Computers

David argued that in 1990, businesses were making the same mistake with computers: overlaying them onto existing processes rather than restructuring work fundamentally. He predicted that significant productivity gains would only materialize once organizations completely redesigned their operations to leverage digital capabilities, just as factories had to be rebuilt around electricity decades earlier. The “productivity paradox” was thus a temporary measurement problem reflecting the natural lag between technology adoption and the organizational learning required to use it effectively.

Machines Made of Human Labor

Like workers who do physical labor, office workers are also analogous to mechanical components in an enterprise. Think about it. Transmission systems in real machines have hierarchies (of gears, shafts, and pulleys), and so do enterprises. The components of such business machines are humans. Human labor and intelligence are distributed within an enterprise in a centralized way. Human labor (and intelligence), in an abstract sense, is the steam engine of an enterprise, while managers, rules and bureaucracies are its “transmission”. A significant portion of our social structures is built around human labor. These social structures (conventions, laws, etc.) support the engine and prevent it from being misused or abused: worker welfare, labor laws and labor unions, for example. Even VCs talk about it when they say “all problems are human problems”.

AI as a Turbo Charger and as a New Engine

Now everyone is talking about AI: AI taking over jobs, AI taking over businesses, AI taking over everything. Some pragmatists say AI can boost the productivity of human labor but not replace it. That’s reasonable. And indeed, the report mentions that

Enterprise users report saving 40–60 minutes per day

When I read this, I felt a bit strange. Why only 40–60 minutes per day? I was expecting something like a 2x boost, which would mean hours saved per day. But I think I knew the answer already: the people components. By people components, I mean people and the structures around them. For example, although AI is all around, I still need to read files that are hidden deep on a website, fill out forms that even I cannot understand, and go through bureaucracies that seem designed to be as complex as possible. This is not a rant, but an example.

Moreover, even when AI helps me with a lot of stuff, I still need to take time to review the results, and honestly I’m too lazy to do that, so I am the limiting factor. AIs can read and write pages in minutes, but I can’t review them in minutes.

These facts keep reminding me of the friction in machines made of human labor. We now call AIs copilots, but if we take the human labor engine analogy seriously, using copilots is merely installing a turbo charger on the human labor engine. It can of course boost the engine, but much of the boost gets lost in the “transmission” of the machine.
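To put rough numbers on how much boost gets lost, here is a small back-of-the-envelope sketch. It assumes an 8-hour (480-minute) working day, which the report does not state, and it borrows Amdahl's law from computing as a stand-in for the "transmission" losses; both the function names and the example fractions are my own illustrations.

```python
# Assumption: an 8-hour (480-minute) working day; the report only gives minutes saved.
WORKDAY_MIN = 480


def speedup_from_minutes_saved(saved_min, workday_min=WORKDAY_MIN):
    """Overall speedup if the same day's work now takes `saved_min` fewer minutes."""
    return workday_min / (workday_min - saved_min)


def amdahl_speedup(fraction_accelerated, factor):
    """Amdahl's law: only `fraction_accelerated` of the work becomes `factor` times faster."""
    return 1.0 / ((1.0 - fraction_accelerated) + fraction_accelerated / factor)


if __name__ == "__main__":
    for saved in (40, 60):
        print(f"{saved} min saved -> {speedup_from_minutes_saved(saved):.2f}x overall")

    # Hypothetical split: AI makes 20% of the day 10x faster, the rest is untouched.
    print(f"20% of the day at 10x -> {amdahl_speedup(0.2, 10):.2f}x overall")
```

Under that assumption, saving 40–60 minutes corresponds to roughly a 1.09x to 1.14x overall speedup. By the same formula, a 2x boost is out of reach unless at least half of the day is accelerated, no matter how fast the engine itself becomes.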

Even though some companies are replacing human labor with AIs, this is essentially installing a new “electric motor” in the same machine. What is needed is a new “unit drive” paradigm shift, but all 2B AI companies are competing to install more turbo chargers or new electric motors in factories that are steam-powered by human labor.

Implications and the Future

History does not repeat itself but it rhymes. We’ve seen gigafactories of AI and probably they will remain, just like power plants. Tokens and compute will be infrastructural resources, just like electricity. If we take the analogy further, what will happen when a new “unit drive” paradigm shift happens? A few implications are clear:

Everyone is an individual entrepreneur and enterprise: There will no longer be “group drive” structures built for human labor. Labor and intelligence will no longer be distributed centrally inside a company. Instead, everyone is an independent enterprise equipped with “electric motors” (that is, AIs), which is the true new unit drive paradigm.

Universal Basic Income and Token Care: When there are no good old enterprises, UBI is inevitable to sustain individual entrepreneurs. Access to tokens will be a new human right, just like access to electricity and the Internet. Thanks to mass production, the price of tokens will continue to decrease until it is asymptotically close to the cost of electricity.

2B will be 2C: When there are no giant enterprises, selling services to enterprises equals selling services to individuals. We’ve seen such trends in “To Pro Users” services.

Open technical and social protocols prevail: There will no longer be all-in-one proprietary solutions or applications. Every enterprise and every individual will build their own “electric motors” based on open-source solutions, templates and protocols.

Interesting details

If the above implications seem too general, I have a couple of near-term predictions after rethinking agent development in my recent project:

- A new type of personal computer (or at least a new type of filesystem) will emerge to suit the needs of human collaboration with agents.

- Agentic CI/CD will be the first step towards automation with no human involvement (not even minimal monitoring).

Here’s a bit of background: I have quite a few data visualizations that are tailored for desktop browsers, and I want to make them all mobile friendly. This conversion is not as trivial as it seems. The conversion agent or agents need to extract the data behind a visualization, guess the intention of the original visualization (what information to preserve, present or even emphasize), and finally generate a mobile-friendly visualization that presents the “same” information in a readable and friendly way on mobile devices.

My first attempt was to use Google Antigravity, since it lets agents see the converted mobile visualization in the browser so that they can correct any UX mistakes. However, as an agent needed to go through many steps (read the original visualization on a desktop browser and a mobile browser, read the original code, extract the data, implement the mobile visualization and check it on the mobile browser), it sometimes missed key steps and made mistakes. This was expected, so I would give the agent some reminders. The result was not bad; in fact, to my surprise, it was way beyond my expectations. However, I knew that this was not scalable, as I had many visualizations to convert and little time to monitor the agent.

Then, I decided to go multi-agent: one agent is the planner, one is the data extractor, one is the implementor and the last one is the reviewer. The one library that came to my mind was LangChain, but honestly, even though I have not written multi-agent implementations for a long, long time (~10 months!), I still remember how much it sucked. I also read its documentation and found a shiny new library called DeepAgent, which honestly seemed nice. However, I just could not convince myself to use it. As I have many visualizations, meaning many small projects that need to be handled by agents, I needed to provide many clean workspaces for them to work in, but DeepAgent does not seem to offer an easy way to spawn many folder-based workspaces. Besides, I got a headache just thinking about how to manage the interactions between these four agents: what messages to send to each other, what context to share, what tools to use, how to manage feedback loops in each agent, etc.

I just wished for a zero-setup agent that could use all the tools on my computer, do the work and check the results itself. Wait, in fact, I already have not one but several: codex, gemini-cli and claude code! So I decided to just go for a two-agent setup:

- The planner is actually just a workflow. A Python script renders the original visualization in a desktop browser and a mobile browser, so there is no need for an agent to open two different browsers with flaky tool use. The renderings, together with the original code and other text requirements, are sent to Gemini 3 Pro, which reliably generates a detailed implementation plan.

- For each visualization, a clean JS project with necessary dependencies and scaffolding code is generated from a template. The detailed plan is saved as a text file in the project, along with the original visualization renderings and the original code. One desktop visualization -> One clean JS project

- The second agent is just gemini-cli, which reads the plan and does whatever it needs to do to implement the plan.
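The rendering step of the planner workflow might look roughly like the sketch below. It is not my original script: it assumes the Playwright Python package with a Chromium build installed, and the viewport sizes, file naming and function names are illustrative choices.

```python
from pathlib import Path

# Hypothetical viewport presets; tune them to the devices you care about.
VIEWPORTS = {
    "desktop": {"viewport": {"width": 1440, "height": 900}},
    "mobile": {
        "viewport": {"width": 390, "height": 844},
        "device_scale_factor": 3,
        "is_mobile": True,
        "has_touch": True,
    },
}


def screenshot_path(out_dir, name, target):
    """Where the rendering of visualization `name` for `target` is saved."""
    return Path(out_dir) / f"{name}.{target}.png"


def render_both(url, name, out_dir):
    """Render `url` once per preset and return the screenshot paths by target."""
    # Imported lazily so the helpers above work without Playwright installed.
    from playwright.sync_api import sync_playwright

    Path(out_dir).mkdir(parents=True, exist_ok=True)
    paths = {}
    with sync_playwright() as p:
        browser = p.chromium.launch()
        for target, options in VIEWPORTS.items():
            # A fresh context per target keeps the two renderings isolated.
            context = browser.new_context(**options)
            page = context.new_page()
            page.goto(url, wait_until="networkidle")
            path = screenshot_path(out_dir, name, target)
            page.screenshot(path=str(path), full_page=True)
            paths[target] = path
            context.close()
        browser.close()
    return paths
```

Because the script renders both views deterministically, the planner model only ever receives finished images and never has to drive a browser itself.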

The only minor problem is that the node_modules folders in many projects take up a lot of space. But it’s a reasonable price to pay, since now the only script I need to write is just:

    # pseudo code
    for each desktop visualization:
        render the visualization on a desktop browser and a mobile browser
        run a Python script to generate a detailed implementation plan
        copy the files in the template to create a new project
        save the plan to the project
        configure a playwright MCP for gemini-cli to use
        run gemini-cli to implement the plan and ask it to review the results itself

No context management, no messaging whatsoever. Just run the script and wait for the results.
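For the curious, the pseudo code above could be sketched in Python along these lines. This is a minimal illustration, not my actual script: the file name `PLAN.md`, the function names, and the default agent command (including its `-p` prompt flag) are assumptions to adapt to your own tools, and the MCP configuration is assumed to already live in the template.

```python
import shutil
import subprocess
from pathlib import Path


def create_workspace(viz_file, template_dir, out_root, plan_text):
    """Copy the template into a fresh project folder, then add the plan and the original code."""
    viz_file = Path(viz_file)
    project = Path(out_root) / viz_file.stem
    # copytree raises if the folder already exists, so every workspace starts clean.
    shutil.copytree(template_dir, project)
    (project / "PLAN.md").write_text(plan_text, encoding="utf-8")
    shutil.copy(viz_file, project / f"original{viz_file.suffix}")
    return project


def run_cli_agent(project, agent_command=("gemini", "-p", "Implement PLAN.md, then review the result yourself.")):
    """Run a CLI agent inside the project folder.

    Assumption: the agent CLI accepts a one-shot prompt this way; check your tool's help.
    """
    subprocess.run(list(agent_command), cwd=project, check=True)


def run_pipeline(viz_files, template_dir, out_root, make_plan, run_agent=run_cli_agent):
    """One desktop visualization -> one clean project -> one agent run."""
    projects = []
    for viz_file in viz_files:
        plan = make_plan(viz_file)  # e.g. render both views and ask a model for a plan
        project = create_workspace(viz_file, template_dir, out_root, plan)
        run_agent(project)
        projects.append(project)
    return projects
```

Passing `make_plan` and `run_agent` in as plain functions keeps the loop itself free of any agent framework, which is the whole point: the folder is the only shared context.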

While I was taking a shower and waiting for the results, I realized that this is quite similar to a CI/CD pipeline, except that the pipeline is composed of agents instead of scripts, runs on my computer instead of a remote server, and is version-controlled by folders instead of git repositories. This is elegant in a brute-force sense and ugly in a dumb sense. But let’s take a step back and ask: why did I have to do this?

First of all, I, as a human, had become a limiting factor in my grand project. I simply did not have enough time to do everything myself or even to monitor the agents. I was and still am not scalable. On the technical side, PCs (“personal computers”) are not scalable either. They are designed for a single user, not for multiple users. For example, we cannot easily spawn multiple folders, since this made no sense when the only user was me. Even the holy git, the distributed version control system, is not designed for multiple users on one computer. For example, within a single working directory we cannot have multiple branches checked out at the same time, because this made no sense either. However, as of today, the number of users on one computer working on the same project, in the same directory, or in the same workspace is no longer 1.

When we work with multiple agents, we need a new filesystem or git to support simultaneous parallel universes of work. Docker may be a solution, but come on, we deserve better.
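Until something better exists, `git worktree` gets part of the way there: it checks out additional branches of one repository into separate folders. The sketch below shows how per-agent workspaces could be spawned with it. The `agent/<name>` branch naming and the function names are hypothetical, and the repository is assumed to already have at least one commit.

```python
import subprocess
from pathlib import Path


def worktree_command(workspace, branch):
    """Build the `git worktree add` call that checks out a new branch in its own folder."""
    return ["git", "worktree", "add", "-b", branch, str(workspace)]


def spawn_workspaces(repo, agent_names, root):
    """Create one working tree and one branch per agent, all backed by the same repository."""
    workspaces = {}
    for name in agent_names:
        workspace = Path(root).resolve() / name
        # Hypothetical naming scheme: each agent works on its own agent/<name> branch.
        subprocess.run(worktree_command(workspace, f"agent/{name}"), cwd=repo, check=True)
        workspaces[name] = workspace
    return workspaces
```

This still leaves merging, cleanup and dependency folders to us, so it is a workaround rather than the parallel-universe filesystem I am wishing for.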

When we need to monitor the agents, we ourselves become the new mainframe, since our attention and time are limited and thus must be multiplexed. You see, previously, every job or piece of work got assigned to a human. With automation, some pieces of work can be completed under the supervision or control of a human. But what if most of the work no longer needs to be assigned to a human controller or monitor? That, to me, will be another leap, like the one from the mainframe to the personal computer.

Metadata

Version: 0.2.0

Date: 2025-12-09

License: CC BY-SA 4.0

Changelog

2025-12-26: Added interesting details